Software Is Using Us Now

From humans using software, to software using humans…

In the world we're leaving behind, the model of technology adoption was simple: Humans use software.

We started with the brute-force era, when software worked, but barely. Awkward interfaces. Clunky logic. We knew there was a powerful feature somewhere, but we didn't know where to click or how to find the button.

We tolerated it because we didn't have anything better.

Then we entered the golden age of SaaS.

Product teams built tools that adapted to human needs. Interfaces were thoughtfully crafted. UX designers obsessed over making things intuitive, beautiful, frictionless.

Everything revolved around encouraging human usage. We were at the center of the system.

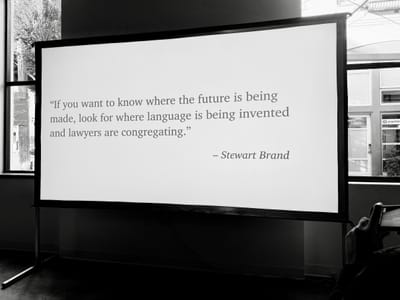

But we're now experiencing a fundamental shift.

We're entering a new paradigm — where software, specifically AI agents, uses humans.

This new version of software doesn't just wait to be used — it initiates. It acts. And sometimes, it needs us.

Today's companies are increasingly AI-native and agent-led. Customer interactions are now fronted by AI systems that can engage, respond, reason, and act. What used to be rigid and mechanical (chatbot logic trees and FAQ bots) is now fluid, contextual, human-like intelligence.

And when agents reach the limits of what they can do, they don't stop.

They escalate.

This shift has a name: Human In The Loop.

It marks the moment when software calls on us — not to use it, but to help it.

To verify something.

To navigate ambiguity.

To make a judgment.

To approve or reject.

And in doing so, to take accountability.

Because that's what agents cannot do yet — take ultimate responsibility.

For now, we're dealing with a sliding scale of autonomy, where AI agents handle what they can and humans step in where needed.

Over time, AI systems will grow more sophisticated, increasingly capable... more autonomous. And as they do, they'll "use" us differently. We'll be called upon to deal with increasingly complex layers of decision-making, provide fail-safes, and become extensions of AI reasoning.

Before long, we might only be needed for high-stakes negotiations, ethical judgment calls, or truly inventive work.

This new generation of software is not built on the paradigm of "What can I do for a human as they complete their task?" but on "What can a human do for me so I can complete my task?" It asks: How far can I go on my own, and where do I need a human in the loop?

And so we must change our approach to design. We're not just improving how software works. We're rethinking who it works with — and how.

The most effective systems won't just assist humans — they'll rely on us.

We need to build with orchestration in mind.

We need to design interfaces not just for end users, but for AI agents that have human teammates and even human subordinates.

And whatever we build will not be confined to an app or a platform but will infiltrate the realm of humans: emailing us, texting us, calling us, watching us, popping up on our feeds.

This isn't about software asking, "How can I serve the human?" It's about asking, "How can the human serve the mission?"

This evolution will be fascinating, because what's really happening is that in this new world, we humans aren't just users; we're part of the infrastructure to be used.